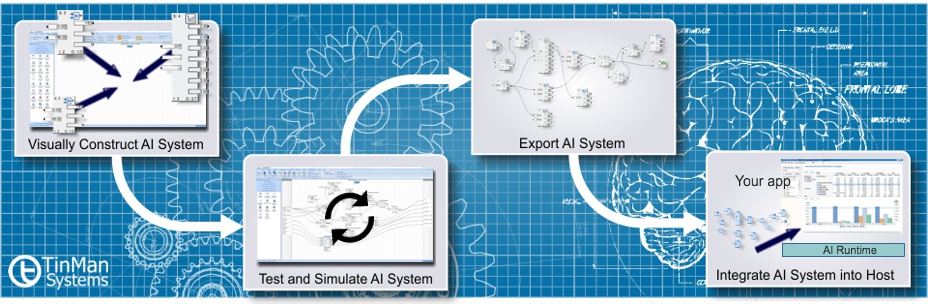

The process of building and deploying an AI system with TinMan AI Builder consists of four steps:

The first phase, the design phase, involves selecting, customizing and connecting a set of computational components to achieve the desired overall computation. Each of the 85 computational components can be added to the AI system any number of times, in series or in parallel, and each instance can be customized to suit the form of data processing required. The current set of computational components includes abstracted mathematical and trigonometric components, pattern classification and recognition (including self-generating artificial neural networks), pattern matching, and statistical and vector-based algorithms. Each component has a distinct symbol and visual shape, with default and custom dynamic inputs and outputs that are labeled verbosely and shown alongside the current computed value, giving an intuitive representation of function and purpose.

Connections are made at design time by dragging from a component input to an output, or vice versa. Any circular dependencies, feedback loops and recursive pathways are handled automatically by the IDE and its underlying logic execution engine. Components are added to system modules, and any number of modules can be added to an AI system. Modules can be connected to function in series, in parallel, or both.

Because each module contains its own set of interconnected components, it is considered a complete sub-system that can be exported for reuse in other AI systems, or even within the same AI system if redundancy or replication of a set of processes is desired. For example, if an AI system needed to process sensor information from each wheel of a four-wheeled robotic device, a single module could be designed to support wheel sensors and then simply replicated (copied) and applied on a one-to-one basis to each of the four sets of wheel sensors. These modules could then report their information to a second-level process that interprets synchronicity and/or other feedback from the four wheels.
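The wheel example can be sketched in ordinary C to show the structure: one module definition, four instances, and a second-level process that compares them. This is only an illustration of the idea, not code generated by or required for AI Builder, where the design is done visually. The `WheelModule` type, the smoothing logic, and the "largest deviation from the mean" check are all assumptions chosen for the sketch.

```c
#include <assert.h>
#include <stddef.h>

#define WHEEL_COUNT 4

/* Hypothetical per-wheel module (names and fields are illustrative only). */
typedef struct {
    double speed_in;      /* input: raw wheel-speed sensor reading */
    double smoothed_out;  /* output: exponentially smoothed speed */
} WheelModule;

/* One cycle of a single wheel module: exponential smoothing of its input. */
void wheel_module_step(WheelModule *m, double alpha) {
    assert(alpha >= 0.0 && alpha <= 1.0);
    m->smoothed_out = alpha * m->speed_in + (1.0 - alpha) * m->smoothed_out;
}

/* Second-level process: largest deviation of any wheel from the mean output. */
double wheel_sync_error(const WheelModule wheels[], size_t n) {
    assert(n > 0);
    double mean = 0.0;
    for (size_t i = 0; i < n; ++i) {
        mean += wheels[i].smoothed_out;
    }
    mean /= (double)n;

    double worst = 0.0;
    for (size_t i = 0; i < n; ++i) {
        double d = wheels[i].smoothed_out - mean;
        if (d < 0.0) d = -d;
        if (d > worst) worst = d;
    }
    return worst;
}

/* Apply the same module logic to every wheel, then run the second-level check. */
double wheels_cycle(WheelModule wheels[], const double readings[],
                    size_t n, double alpha) {
    for (size_t i = 0; i < n; ++i) {
        wheels[i].speed_in = readings[i];
        wheel_module_step(&wheels[i], alpha);
    }
    return wheel_sync_error(wheels, n);
}
```

A host would call `wheels_cycle` with an array of `WHEEL_COUNT` modules and the latest four readings. The point of the sketch is that the per-wheel logic is written once and reused for each wheel, which is what replicating a module achieves in the IDE.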

During the test and simulate phase, random data and/or data read directly from spreadsheets can be used to verify functional accuracy and structural efficiency. Once the AI system has been exported, the runtime API is used to load it, populate its inputs, and execute cycles of logic. Ultimately, external data from the host environment is fed to the modules within the application (more precisely, the modules subscribe to it), and the external outputs are the result of the AI system's computation. At runtime, these system outputs expose the resulting values from each cycle of execution. It is then up to the host environment to decide what to do with that data.

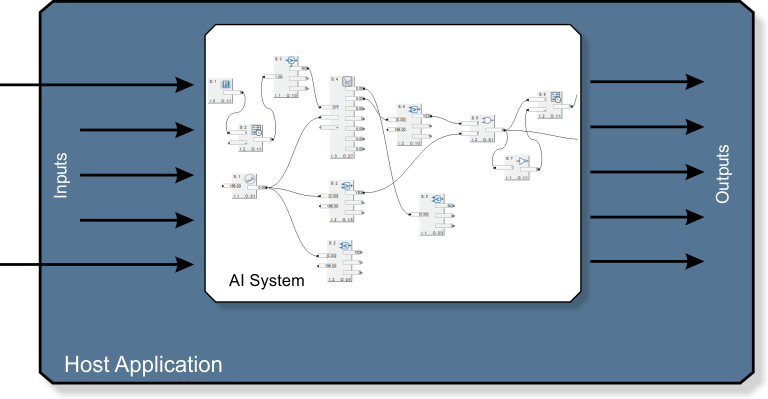

How it Works

Once an AI system has been constructed, it is exported to a file for integration with the host application. At runtime, the host application collects information from its sensors and/or human interface components and presents the desired set of data to the AI system for computation. The AI system performs its computation, exactly as it was designed to do in the IDE, and updates the values of all of its exposed outputs. The host application then reads these values and responds appropriately. Programming that response is the task of the host application developer. Examples include visual display of the information, authentication, classification or identification of a subject (as in a biometric application), movement or articulation of a robotic joint, presentation of a word or sentence, prediction of an event, or an update to the sensitivity levels of the sensors themselves. The key point is that the AI system is presented with information, processes it, and presents the computed results to the host application.

The complete process, from updating the input values, through the computation of the system, to the presentation of the output values, is considered a single 'cycle of execution' or 'cycle of logic'. In some host environment configurations, this cycle might be triggered manually by the press of a button or the selection of a menu item (e.g. a medical application that performs a diagnosis based on inputs regarding patient history and symptoms). In other configurations, the computation might be done continuously, many times per second (e.g. a robotic device that maintains balance using information from its gyro and pressure sensors, processed 20-100 times per second). In either case, computation is the production of output information from the processing of input data.
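The two triggering styles can be sketched as follows. This is a minimal illustration under stated assumptions: `DemoSystem`, its fields, and the placeholder formula stand in for an exported AI system and are not part of the TinMan runtime. A manually triggered host calls `demo_cycle` once per user action; a continuous host calls it in a loop at a fixed rate.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for an exported AI system: two inputs, one output. */
typedef struct {
    double gyro_in;
    double pressure_in;
    double correction_out;
    unsigned long cycles;   /* number of cycles executed so far */
} DemoSystem;

/* One cycle of execution: update inputs, compute, publish the output. */
void demo_cycle(DemoSystem *s, double gyro, double pressure) {
    s->gyro_in = gyro;                       /* 1. update input values */
    s->pressure_in = pressure;
    s->correction_out = -0.5 * s->gyro_in    /* 2. compute (placeholder logic) */
                      + 0.25 * s->pressure_in;
    s->cycles++;                             /* 3. output is now readable */
}

/* Time budget per cycle, in milliseconds, for a continuous configuration. */
unsigned period_ms(unsigned cycles_per_second) {
    assert(cycles_per_second > 0);
    return 1000u / cycles_per_second;
}

/* Continuous configuration: one cycle per sample, outputs collected in out[]. */
void run_continuous(DemoSystem *s, const double gyro[], const double pressure[],
                    double out[], size_t n) {
    for (size_t i = 0; i < n; ++i) {
        demo_cycle(s, gyro[i], pressure[i]);
        out[i] = s->correction_out;
        /* A real host would wait for the remainder of period_ms(rate) here;
           the wait is platform-specific and omitted from this sketch. */
    }
}
```

At 20 cycles per second the host has a 50 ms budget per cycle, and at 100 cycles per second it has 10 ms, which is what `period_ms` computes.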

The runtime engine is made available in the form of a Windows Dynamic Link Library (DLL), and is accessed and operated by the host application via straightforward C API calls. Other forms are available. At runtime, the host application would typically perform five operations via this API: a one-time load of the desired exported AI system file, setting of the input values prior to execution, execution, extraction of the resulting output values, and finally a one-time unload of the exported AI system file. The setting and reading of input and output values can be optimized by using the interface pointers to the actual internal AI inputs and outputs.
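The five operations, and the pointer-based optimization, can be sketched against a mock engine. Every name below (`MockSystem`, `mock_load`, `mock_set_input`, and so on) is hypothetical and invented for this sketch. The real DLL's function names, signatures, and file format are not given here, so the mock simply adds its two inputs to give the host code something to call.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* HYPOTHETICAL mock engine: not the real runtime API. */
typedef struct {
    double inputs[2];
    double outputs[1];
} MockSystem;

MockSystem *mock_load(const char *path) {
    (void)path;  /* a real engine would read the exported system file here */
    return calloc(1, sizeof(MockSystem));
}
void mock_set_input(MockSystem *s, int i, double v) { s->inputs[i] = v; }
void mock_execute(MockSystem *s) { s->outputs[0] = s->inputs[0] + s->inputs[1]; }
double mock_get_output(const MockSystem *s, int i) { return s->outputs[i]; }
void mock_unload(MockSystem *s) { free(s); }

/* Pointer accessors, standing in for the "interface pointers" idea. */
double *mock_input_ptr(MockSystem *s, int i) { return &s->inputs[i]; }
const double *mock_output_ptr(const MockSystem *s, int i) { return &s->outputs[i]; }

/* The five host-side operations, in order. Returns 0 on success. */
int host_run(const char *path, double a, double b, double *result) {
    MockSystem *sys = mock_load(path);      /* 1. one-time load */
    if (sys == NULL) return -1;
    mock_set_input(sys, 0, a);              /* 2. set input values */
    mock_set_input(sys, 1, b);
    mock_execute(sys);                      /* 3. execute one cycle */
    *result = mock_get_output(sys, 0);      /* 4. read output values */
    mock_unload(sys);                       /* 5. one-time unload */
    return 0;
}

/* Optimized variant: look up the pointers once, reuse them every cycle. */
double host_run_many(MockSystem *sys, const double a[], const double b[], size_t n) {
    double *in0 = mock_input_ptr(sys, 0);
    double *in1 = mock_input_ptr(sys, 1);
    const double *out0 = mock_output_ptr(sys, 0);
    double total = 0.0;
    for (size_t i = 0; i < n; ++i) {
        *in0 = a[i];            /* write inputs directly, no per-call lookup */
        *in1 = b[i];
        mock_execute(sys);
        total += *out0;         /* read the output directly */
    }
    return total;
}
```

In a continuous configuration, load and unload happen once, outside the loop, and only the middle three operations repeat each cycle. Caching the pointers up front, as in `host_run_many`, avoids a lookup per value per cycle.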

Platform Support

TinMan Systems' technology foundation is currently offered on both PC and web-based platforms. Our PC-based product is AI Builder. Our web-based platform is primarily provided via professional services. Our in-house tools enable us to build a web-based AI system for hosting and access via web services on TinMan web servers or, depending on project type, to install the functional code on designated customer servers.

Some Example Host Implementations of AI Systems